Self-driving cars are revolutionizing transportation, navigating complex environments with a suite of sophisticated sensors. But how do these vehicles interpret the vast amounts of data they collect to understand the world around them? The answer lies in deep neural networks (DNNs). Perception, as it's known in the industry, is the capability that lets these cars process and identify road information, from traffic signals and pedestrians to the lanes they travel within. Artificial intelligence gives driverless vehicles this perception, so they can react in real time and navigate safely.

These abilities come from DNNs. Instead of relying on manually coded rules, such as "stop at a red light," DNNs let self-driving cars learn to interpret their surroundings from sensor data. These mathematical models are loosely inspired by the human brain and learn through experience. For example, after being trained on many images of stop signs under various conditions, a DNN can learn to identify stop signs on its own.
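To make "learning from examples instead of rules" concrete, here is a minimal sketch. It deliberately swaps in the simplest possible learner, a single logistic unit trained by gradient descent, rather than a deep network. The two input features (redness and octagon-likeness) and all of their values are invented for illustration; a real perception DNN learns its own features directly from camera pixels. The point is only that the decision boundary comes from labeled data, not from a hand-written rule.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(samples: Sequence[Sequence[float]], labels: Sequence[int],
          epochs: int = 2000, lr: float = 0.5) -> Tuple[List[float], float]:
    """Fit weights and bias by stochastic gradient descent on the cross-entropy loss."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of the cross-entropy loss w.r.t. the unit's input
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> bool:
    """True means the model thinks the image shows a stop sign."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5


# Invented toy data: (redness, octagon-likeness) per image; label 1 = stop sign.
samples = [(0.9, 0.95), (0.8, 0.9), (0.85, 0.7),   # stop signs
           (0.2, 0.1), (0.9, 0.1), (0.1, 0.9)]     # not stop signs (e.g. red rectangle, grey octagon)
labels = [1, 1, 1, 0, 0, 0]

w, b = train(samples, labels)
print(predict(w, b, (0.88, 0.85)))  # an unseen red octagon
print(predict(w, b, (0.1, 0.1)))    # an unseen grey, non-octagonal object
```

Nothing in the code says "red plus octagon means stop"; the weights that encode that rule are fitted from the labeled examples. Stacking many such units in layers and training on raw images is, very roughly, the step from this toy to a DNN.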

Two Pillars of Self-Driving Car Safety: Diversity and Redundancy in Neural Networks

No single algorithm can handle the full task of autonomous driving. Safe operation relies on a diverse set of DNNs, each dedicated to a specific function. These networks are diverse, handling tasks that range from reading road signs to identifying intersections and detecting drivable paths, and they are also redundant, with overlapping capabilities that reduce the risk of system failure. The number of DNNs isn't fixed; it keeps changing as new capabilities are developed and integrated.
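One generic way to exploit redundancy is majority voting across networks that answer the same question, a technique borrowed from fault-tolerant systems design. The sketch below assumes three hypothetical networks that each report a traffic-light state, or nothing if their input is unusable. The article does not say that DRIVE Software fuses outputs this way, so treat this only as an illustration of the idea; `majority_vote` is a made-up name.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_vote(outputs: Sequence[Optional[str]]) -> Optional[str]:
    """Return the label reported by a strict majority of redundant networks.

    A None entry stands for a network that produced no usable answer
    (for example, because its camera was blinded). It still counts toward
    the total, so missing answers make agreement harder, not easier.
    Returns None when no label has a strict majority.
    """
    votes = Counter(o for o in outputs if o is not None)
    if not votes:
        return None
    label, count = votes.most_common(1)[0]
    return label if count > len(outputs) // 2 else None


print(majority_vote(["red", "red", None]))    # two of three networks agree
print(majority_vote(["red", "green", None]))  # no strict majority
```

Returning None when there is no clear majority lets the caller fall back to a conservative behavior, such as treating the light as red, instead of trusting any single network.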

Turning the outputs of these individual DNNs into driving actions requires real-time processing, which in turn requires a powerful, centralized computing platform such as NVIDIA DRIVE AGX.

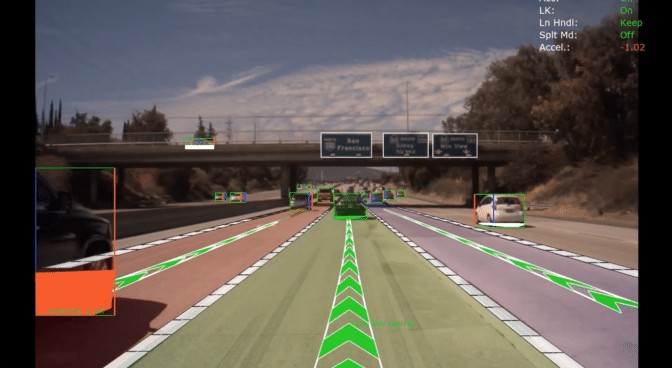

Below are examples of core DNNs that NVIDIA uses for autonomous vehicle perception, illustrating the breadth of networks the task requires.

Pathfinding Neural Networks: Charting the Course

These DNNs are crucial for enabling the car to determine drivable areas and plan safe routes:

- OpenRoadNet: Identifies all drivable space surrounding the vehicle, regardless of lane markings.

- PathNet: Highlights the safe path ahead, even in the absence of clear lane markers.

- LaneNet: Detects lane lines and road markers to define the vehicle’s current path.

- MapNet: Identifies lanes and landmarks, contributing to the creation and maintenance of high-definition maps.

Figure: How pathfinding DNNs such as OpenRoadNet, PathNet, and LaneNet work together to determine a safe driving path.

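Overlapping pathfinding networks also make cross-checks possible. As a hypothetical sketch, assume OpenRoadNet's output has been reduced to a boolean bird's-eye-view grid of drivable cells and PathNet's output to a list of grid cells; the function below accepts the proposed path only if every cell lies in drivable space. These data formats and the function name are assumptions for illustration, not NVIDIA's actual interfaces.

```python
from typing import List, Sequence, Tuple

Cell = Tuple[int, int]  # (row, col) in a hypothetical bird's-eye-view grid


def path_within_free_space(path: Sequence[Cell], drivable: List[List[bool]]) -> bool:
    """Accept a proposed path only if every cell is inside drivable space.

    Cells outside the grid are treated as not drivable, so the check fails safe.
    """
    for r, c in path:
        if not (0 <= r < len(drivable) and 0 <= c < len(drivable[r])):
            return False
        if not drivable[r][c]:
            return False
    return True


# Hypothetical 4x3 grid: row 0 is farthest ahead, row 3 is where the car is.
drivable = [
    [True, True, True],
    [True, False, True],  # something blocks the middle cell
    [True, True, True],
    [True, True, True],
]
straight = [(3, 1), (2, 1), (1, 1), (0, 1)]
swerve = [(3, 1), (2, 2), (1, 2), (0, 1)]
print(path_within_free_space(straight, drivable))  # False: crosses the blocked cell
print(path_within_free_space(swerve, drivable))    # True
```

If the path network and the drivable-space network disagree, the system has evidence that one of them is wrong, which is exactly the situation redundancy is meant to expose.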

Object Detection and Classification Neural Networks: Perceiving the Environment

These DNNs are essential for detecting obstacles, traffic lights, and signs:

- DriveNet: Perceives other vehicles, pedestrians, traffic lights, and signs, though it doesn’t interpret light colors or sign types.

- LightNet: Classifies the state of traffic lights – red, yellow, or green.

- SignNet: Identifies the type of road sign – stop, yield, one way, etc.

- WaitNet: Detects situations requiring the vehicle to stop and wait, such as at intersections.
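The division of labor between DriveNet and the classifiers suggests a two-stage design: one network finds and localizes objects, then specialist networks label the relevant image crops. The sketch below wires that flow together with stand-in functions. `Detection`, `perceive`, the category strings, and the stub models are all hypothetical; real networks would replace the stubs, and the article does not describe how DRIVE Software actually passes data between these DNNs.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Image = List[List[int]]          # hypothetical frame: rows of pixel values
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    category: str  # e.g. "vehicle", "pedestrian", "traffic_light", "sign"
    box: Box


def crop(image: Image, box: Box) -> Image:
    """Cut the detected region out of the frame."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def perceive(
    image: Image,
    detector: Callable[[Image], List[Detection]],
    light_classifier: Callable[[Image], str],
    sign_classifier: Callable[[Image], str],
) -> List[Tuple[Detection, Optional[str]]]:
    """Stage 1 finds objects; stage 2 labels traffic-light and sign crops."""
    results: List[Tuple[Detection, Optional[str]]] = []
    for det in detector(image):
        if det.category == "traffic_light":
            label: Optional[str] = light_classifier(crop(image, det.box))
        elif det.category == "sign":
            label = sign_classifier(crop(image, det.box))
        else:
            label = None  # other objects need no second-stage label here
        results.append((det, label))
    return results


# Stand-in models with fixed outputs, for demonstration only.
def stub_detector(image: Image) -> List[Detection]:
    return [
        Detection("traffic_light", (0, 0, 2, 4)),
        Detection("pedestrian", (2, 0, 4, 4)),
        Detection("sign", (4, 0, 6, 2)),
    ]


def stub_light_classifier(patch: Image) -> str:
    return "red"


def stub_sign_classifier(patch: Image) -> str:
    return "stop"


frame: Image = [[0] * 8 for _ in range(6)]
for det, label in perceive(frame, stub_detector, stub_light_classifier, stub_sign_classifier):
    print(det.category, label)
```

Keeping detection and classification separate means each specialist network only ever sees the crops it was trained on, and either stage can be retrained or replaced without touching the other.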

Expanding Neural Capabilities

Other DNNs monitor the health of the vehicle's own sensors and support complex maneuvers such as parking:

- ClearSightNet: Monitors camera visibility, detecting conditions like rain, fog, and glare that can impair vision.

- ParkNet: Identifies available parking spaces.

These examples represent just a fraction of the DNNs that constitute the diverse and redundant DRIVE Software perception layer. To delve deeper into NVIDIA’s approach to autonomous driving software, explore the DRIVE Labs video series.