Self-driving cars are no longer a futuristic fantasy; they are rapidly becoming a reality. At the heart of this revolution lies artificial intelligence, specifically neural networks. These models empower autonomous vehicles to perceive and understand the complex world around them, transforming raw sensor data into actionable driving decisions. But how do you actually build the neural networks that let a car drive itself?

The secret lies in perception: the ability of a self-driving car to process and interpret road data in real time. The data streaming in from an array of sensors, including cameras, lidar, and radar, is far too voluminous and varied to handle with hand-written rules alone. Neural networks, particularly deep neural networks (DNNs), are the key to making sense of it. Unlike traditional programming, which relies on explicitly written rules, DNNs learn from examples. Loosely inspired by the human brain, these mathematical models identify patterns and make predictions after training on vast amounts of data. For instance, after being exposed to countless images of stop signs under various conditions, a DNN can learn to recognize a stop sign on its own, even when it is partially obscured or viewed from an unusual angle.
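To make "learning from examples instead of rules" concrete, here is a minimal sketch using a single-layer perceptron, the simplest ancestor of a DNN. It is not a deep network, and the two input features (`redness`, `octagon_score`) are hypothetical, hand-picked stand-ins; a real perception DNN learns its own features directly from pixels. The point is only that the decision rule comes out of the training data rather than being written by hand.

```python
from typing import List, Sequence, Tuple

Example = Tuple[List[float], int]  # (feature vector, label: 1 = stop sign, 0 = not)


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Fire (return 1) when the weighted sum of features exceeds zero."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data: List[Example], epochs: int = 100, lr: float = 0.1):
    """Learn (weights, bias) from labeled examples with the perceptron update rule."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            err = y - predict(w, b, x)  # -1, 0, or +1
            if err:
                mistakes += 1
                # Nudge the decision boundary toward the misclassified example.
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if mistakes == 0:  # every training example is now classified correctly
            break
    return w, b


# Hypothetical, hand-picked features: [redness, octagon_score], both in [0, 1].
training = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.7], 1),
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.2], 0),
]
w, b = train_perceptron(training)
print(predict(w, b, [0.85, 0.75]), predict(w, b, [0.15, 0.15]))  # 1 0
```

Nowhere in the code is there a rule like "red and octagonal means stop"; the weights that encode it are found by repeatedly correcting mistakes on the labeled examples.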

Two Pillars of Autonomous Driving: Diversity and Redundancy in Neural Networks

Creating a safe and reliable self-driving system isn’t about deploying a single, monolithic algorithm. Instead, it requires a diverse and redundant set of DNNs, each designed for a specific task. This approach helps ensure that no single point of failure can compromise the vehicle’s safety.

These networks are diverse, covering a wide spectrum of functionalities, from deciphering traffic signs and signals to identifying intersections and mapping drivable paths. They are also built with redundancy, meaning several DNNs might have overlapping capabilities. This overlap acts as a safety net, minimizing the risk of errors and ensuring consistent performance even if one network falters.
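One simple way to picture redundancy as a safety net is a majority vote across networks with overlapping responsibility. The sketch below is a generic voting scheme under that assumption, not the fusion logic of any particular production system; the network names (`net_a`, `net_b`, `net_c`) and the `min_agree` parameter are hypothetical.

```python
from collections import Counter
from typing import Dict, Optional, Tuple


def fuse_redundant(outputs: Dict[str, str], min_agree: int = 2) -> Tuple[Optional[str], float]:
    """Majority vote over the labels produced by redundant networks.

    Returns (label, agreement), where agreement is the fraction of networks that
    voted for the winning label. label is None when no label reaches min_agree
    votes, signalling that downstream logic should fall back to conservative
    behavior (for example, slowing down) rather than trust any single network.
    """
    if not outputs:
        return None, 0.0
    label, votes = Counter(outputs.values()).most_common(1)[0]
    agreement = votes / len(outputs)
    if votes < min_agree:
        return None, agreement
    return label, agreement


# Three hypothetical networks with overlapping responsibility for sign reading.
print(fuse_redundant({"net_a": "stop", "net_b": "stop", "net_c": "yield"}))
print(fuse_redundant({"net_a": "stop", "net_b": "yield"}))
```

With three networks, one faulty output is outvoted; with two that disagree, no label wins and the caller is told to be cautious instead of picking one arbitrarily.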

The exact number of DNNs in a self-driving system isn’t fixed; it changes as research and development continue. As autonomous driving technology advances, new capabilities, and with them new neural networks, are continually being added.

To turn the outputs of these individual DNNs into driving actions, a high-performance computing platform is indispensable. Platforms like NVIDIA DRIVE AGX are designed to run these networks and process their outputs in real time, so the vehicle can respond to its environment as it changes.
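"Real time" translates into a hard per-frame time budget: at 30 frames per second, every network that consumes a camera frame has roughly 33 ms to finish. The sketch below checks a set of per-network latencies against that budget. The latency numbers and network keys are made up for illustration, and treating parallel execution as "cost of the slowest network" is an idealization that ignores contention for shared compute and memory.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process one sensor frame."""
    return 1000.0 / fps


def fits_budget(latencies_ms: Dict[str, float], fps: float, parallel: bool) -> bool:
    """Check whether per-network inference latencies fit within one frame.

    Sequential execution costs the sum of the latencies. Fully parallel execution
    is idealized here as costing only the slowest network, ignoring contention for
    shared compute and memory bandwidth.
    """
    cost = max(latencies_ms.values()) if parallel else sum(latencies_ms.values())
    return cost <= frame_budget_ms(fps)


# Made-up latencies for illustration only; real numbers depend on the model,
# input resolution, numeric precision, and hardware.
latencies = {"openroad": 8.0, "lane": 6.0, "drive": 12.0, "light": 4.0, "sign": 5.0}
print(round(frame_budget_ms(30), 1))               # 33.3
print(fits_budget(latencies, 30, parallel=False))  # 35 ms in sequence: False
print(fits_budget(latencies, 30, parallel=True))   # 12 ms slowest: True
```

The same five networks miss the budget when run one after another but fit comfortably when they can run concurrently, which is one reason dedicated parallel hardware matters as the number of DNNs grows.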

Let’s explore some core examples of DNNs crucial for autonomous vehicle perception, drawn from the NVIDIA DRIVE perception stack.

Crafting Neural Networks for Pathfinding

Pathfinding DNNs are the navigational brains of a self-driving car, responsible for determining where the vehicle can drive and which paths through that space are viable. Key examples include:

- OpenRoadNet: This network is designed to identify all drivable space surrounding the vehicle, extending beyond lane markings to include adjacent lanes and open road areas. To write a program like OpenRoadNet, you’d focus on training a DNN to segment images or point clouds to distinguish drivable surfaces from non-drivable ones, regardless of lane constraints.

- PathNet: PathNet focuses on highlighting the most viable path ahead, even in the absence of clear lane markers. Developing PathNet involves training a network to predict the continuous path based on visual cues and road geometry, crucial for navigating unmarked roads or construction zones.

- LaneNet: This DNN specializes in detecting lane lines and other road markings that define the vehicle’s current lane. Programming LaneNet requires training a model to accurately identify and classify lane markings from sensor data, enabling the car to stay within its lane.

- MapNet: Going beyond lane detection, MapNet identifies lanes and landmarks that can be used to create and continuously update high-definition maps. Creating MapNet involves training a network to recognize features that serve both as navigational cues and as map elements; its detections can then feed a localization and mapping pipeline, such as one based on simultaneous localization and mapping (SLAM).
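As an illustration of what happens after a drivable-space network such as OpenRoadNet has run, here is a minimal post-processing sketch. It assumes, hypothetically, that the network outputs a grid of per-cell "drivable" probabilities with row 0 farthest from the vehicle; it thresholds the grid into a mask and measures, column by column, how far free space extends before the first blocked cell. The grid, threshold, and function names are all assumptions for illustration.

```python
from typing import List

Grid = List[List[float]]


def drivable_mask(probs: Grid, threshold: float = 0.5) -> List[List[bool]]:
    """Binarize per-cell drivable-space probabilities."""
    return [[p >= threshold for p in row] for row in probs]


def free_space_depth(mask: List[List[bool]]) -> List[int]:
    """Per column, count contiguous drivable cells starting at the row nearest
    the vehicle (the last row) and moving away until the first blocked cell."""
    depths = []
    for c in range(len(mask[0])):
        depth = 0
        for r in range(len(mask) - 1, -1, -1):
            if not mask[r][c]:
                break
            depth += 1
        depths.append(depth)
    return depths


# Hypothetical 4x3 probability map; row 0 is farthest from the vehicle.
probs = [
    [0.1, 0.2, 0.9],
    [0.2, 0.8, 0.9],
    [0.9, 0.9, 0.3],
    [0.9, 0.9, 0.9],
]
print(free_space_depth(drivable_mask(probs)))  # [2, 3, 1]
```

Note that the rightmost column has drivable cells far ahead, but they sit behind a blocked cell, so only one cell of free space is reachable there; counting from the vehicle outward captures that.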

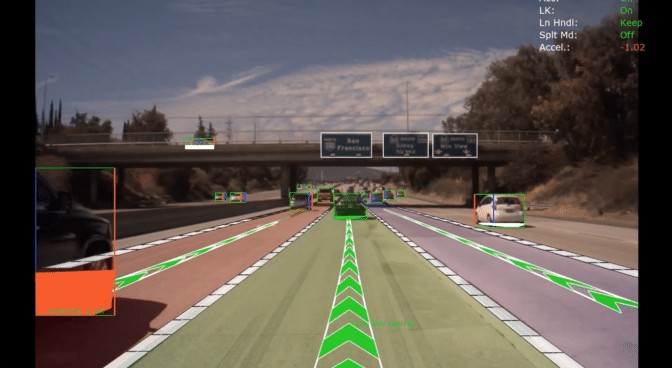

Path-finding DNNs working together to identify a safe driving route for an autonomous vehicle

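One way these networks can "work together" is as cross-checks: a path proposed by a PathNet-style network should lie entirely inside the drivable space reported by an OpenRoadNet-style network. The sketch below assumes both outputs have been projected into the same hypothetical grid, with the path given as `(row, col)` waypoints; it reports the first waypoint that leaves drivable space. This is an illustrative consistency check, not a description of how any specific stack combines these networks.

```python
from typing import List, Sequence, Tuple

Cell = Tuple[int, int]  # (row, col) in the same grid as the drivable mask


def first_conflict(path: Sequence[Cell], mask: List[List[bool]]) -> int:
    """Return the index of the first waypoint that leaves the grid or lands on
    non-drivable space, or -1 if the whole path is drivable."""
    rows, cols = len(mask), len(mask[0])
    for i, (r, c) in enumerate(path):
        if not (0 <= r < rows and 0 <= c < cols) or not mask[r][c]:
            return i
    return -1


# Hypothetical drivable mask (True = drivable); row 0 is farthest ahead.
mask = [
    [False, False, True],
    [False, True,  True],
    [True,  True,  False],
    [True,  True,  True],
]
straight = [(3, 1), (2, 1), (1, 1)]
veering = [(3, 1), (2, 1), (2, 2), (1, 2)]
print(first_conflict(straight, mask))  # -1: every waypoint is drivable
print(first_conflict(veering, mask))   # 2: (2, 2) is not drivable
```

A disagreement between the two networks is itself useful information: it can trigger re-planning or a more cautious behavior instead of silently following either output.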

Developing Neural Networks for Object Detection and Classification

Object detection and classification DNNs are the eyes of a self-driving car, enabling it to perceive and understand other road users and traffic control elements. Examples include:

- DriveNet: This comprehensive network perceives other vehicles, pedestrians, traffic lights, and signs. However, it doesn’t interpret the specific state of a traffic light or the type of sign. Writing DriveNet involves training a robust object detection model capable of identifying a wide range of road objects, providing a foundational layer for scene understanding.

- LightNet: Complementing DriveNet, LightNet is specifically designed to classify the state of traffic lights – red, yellow, or green. Developing LightNet requires training a specialized network to accurately recognize and categorize traffic light signals, a critical component for safe intersection navigation.

- SignNet: SignNet focuses on discerning the type of traffic signs – stop, yield, one way, etc. Programming SignNet involves training a network to classify different types of traffic signs, enabling the car to obey road regulations.

- WaitNet: This DNN detects situations where the vehicle must stop and wait, such as at intersections or pedestrian crossings. Creating WaitNet involves training a network to recognize contextual cues and predict waiting conditions, ensuring the vehicle behaves appropriately in complex scenarios.
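The split between DriveNet (find the object) and LightNet or SignNet (interpret it) suggests a detect-then-classify cascade. The sketch below shows that routing under stated assumptions: the `Detection` record, the class strings, the `min_score` threshold, and the stub classifiers are all hypothetical. In a real system each specialist would run a network on the image crop inside the box; here the stubs simply return fixed labels so the control flow can be seen.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass(frozen=True)
class Detection:
    cls: str                          # coarse class from the detector
    box: Box
    score: float                      # detector confidence in [0, 1]
    attribute: Optional[str] = None   # fine-grained label from a specialist


def refine(detections: List[Detection],
           specialists: Dict[str, Callable[[Box], str]],
           min_score: float = 0.5) -> List[Detection]:
    """Drop low-confidence detections, then send each remaining one to the
    specialist classifier registered for its coarse class, if there is one."""
    refined = []
    for det in detections:
        if det.score < min_score:
            continue
        classify = specialists.get(det.cls)
        if classify is not None:
            det = replace(det, attribute=classify(det.box))
        refined.append(det)
    return refined


# Stand-in specialists: real ones would classify the image crop inside each box.
specialists = {
    "traffic_light": lambda box: "red",
    "traffic_sign": lambda box: "stop",
}

detections = [
    Detection("traffic_light", (410, 80, 430, 140), 0.92),
    Detection("traffic_sign", (600, 150, 650, 200), 0.88),
    Detection("pedestrian", (300, 220, 340, 330), 0.75),
    Detection("vehicle", (20, 240, 60, 270), 0.30),  # below min_score, dropped
]

for det in refine(detections, specialists):
    print(det.cls, det.attribute)
```

Keeping the detector generic and the specialists narrow means each specialist can be retrained or replaced (for example, to handle a new region’s sign set) without touching the detector.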

Expanding the Neural Network Suite

The array of neural networks extends beyond pathfinding and object detection, encompassing other critical aspects of autonomous driving:

- ClearSightNet: This network monitors the visibility conditions of the vehicle’s cameras, detecting impairments like rain, fog, and glare. Developing ClearSightNet involves training a network to assess image quality and identify factors that might degrade sensor perception, allowing the system to adapt to varying weather conditions.

- ParkNet: ParkNet identifies available parking spots, facilitating autonomous parking maneuvers. Writing ParkNet requires training a network to detect and classify parking spaces from sensor data, enabling the vehicle to perform automated parking.
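How might a system "adapt" once a ClearSightNet-style network has scored each camera? A minimal sketch, assuming hypothetical per-camera visibility scores in [0, 1] and a hypothetical `threshold`: cameras below the threshold are excluded from perception, and the caller learns which cameras needed for the current maneuver are impaired, so it can fall back to other sensors or a more cautious mode. Camera names and scores are invented for illustration.

```python
from typing import Dict, List, Tuple


def plan_sensor_use(visibility: Dict[str, float], required: List[str],
                    threshold: float = 0.6) -> Tuple[List[str], List[str]]:
    """Split cameras into usable ones and required-but-impaired ones.

    visibility maps camera name -> score in [0, 1] (0 = fully blocked, 1 = clear).
    A required camera with no score at all is treated as impaired.
    """
    usable = sorted(name for name, score in visibility.items() if score >= threshold)
    impaired_required = [name for name in required if name not in usable]
    return usable, impaired_required


# Hypothetical scores: the telephoto camera is washed out by glare.
scores = {"front_wide": 0.9, "front_tele": 0.3, "rear": 0.8}
usable, impaired = plan_sensor_use(scores, required=["front_wide", "front_tele"])
print(usable)    # ['front_wide', 'rear']
print(impaired)  # ['front_tele']
```

Treating a missing score as "impaired" is a deliberately conservative choice: a camera that reports nothing should not be trusted by default.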

These examples represent just a fraction of the diverse and redundant DNNs that constitute a comprehensive perception layer for autonomous driving. The development of neural programs for self-driving cars is a complex and multifaceted endeavor, requiring expertise in machine learning, computer vision, and robotics. It’s a field that’s continuously evolving, pushing the boundaries of AI and paving the way for a future of safer and more efficient transportation. To delve deeper into the world of autonomous driving software and explore the cutting edge of neural network applications, resources like NVIDIA DRIVE Labs offer valuable insights and demonstrations.