Self-driving cars, also known as autonomous or driverless cars, represent a paradigm shift in transportation. These vehicles navigate and operate without human intervention, relying on a sophisticated blend of sensors, cameras, radar, and, crucially, artificial intelligence (AI). For a car to be truly driverless, it must autonomously manage navigation to a pre-set destination, even on roads not specifically designed for autonomous vehicles. The promise of driverless cars includes reduced traffic congestion, fewer accidents, and the emergence of innovative transportation services like self-driving ride-hailing and trucking.

Companies developing and testing autonomous vehicles include Audi, BMW, Ford, Google (Waymo), General Motors, Tesla, Volkswagen, and Volvo. Waymo, a subsidiary of Alphabet Inc., stands out for its extensive testing program, which has deployed fleets of self-driving cars, including models such as the Toyota Prius and Audi TT, over hundreds of thousands of miles of diverse road conditions. But the fundamental question remains: how exactly are these driverless cars programmed to drive themselves?

The Core Programming Behind Autonomous Driving

The magic behind self-driving cars lies in their intricate programming, primarily driven by AI technologies. Developers leverage vast datasets from image recognition systems, coupled with machine learning (ML) and neural networks, to construct systems capable of autonomous navigation.

Neural networks are central to this process. They are designed to identify patterns within the immense data streams fed to them. This data originates from a suite of sensors, including:

- Radar: Detects objects and their distances using radio waves, functioning effectively in various weather conditions.

- Lidar (Light Detection and Ranging): Employs laser beams to measure distances, creating a detailed 3D map of the surroundings.

- Cameras: Capture visual information, enabling the system to “see” and interpret traffic signals, lane markings, and other visual cues.

These sensors act as the car’s eyes and ears, constantly gathering data about the environment. The neural network processes this raw sensory input, learning to recognize critical elements of a driving environment – traffic lights, pedestrians, road signs, lane markings, and potential obstacles.

Diagram: lidar, radar, and camera sensors positioned around a driverless car to perceive its surroundings.

This processed information allows the self-driving car to build a dynamic, real-time map of its environment. This map is not just a visual representation; it’s a comprehensive understanding of the car’s surroundings that informs path planning. The programming then dictates how the car determines the safest and most efficient route to its destination. This involves adhering to traffic laws, reacting to unexpected obstacles, and making split-second decisions that mimic human driving intuition – all based on lines of code and complex algorithms.
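
To make the path-planning idea concrete, here is a minimal sketch in Python. It assumes the car's map has been reduced to a small occupancy grid (0 = free, 1 = blocked) and uses the well-known A* search to find a shortest route. The function name `plan_path` and the grid representation are illustrative assumptions, not how any particular manufacturer implements planning; production planners work in continuous space and also account for vehicle dynamics and traffic rules.

```python
import heapq

def plan_path(grid, start, goal):
    """A* search over a 2D occupancy grid (0 = free, 1 = blocked).

    Moves are 4-connected with unit cost. Returns a list of (row, col)
    cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # (priority, cost so far, cell)
    came_from = {start: None}
    cost_so_far = {start: 0}

    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale queue entry; a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < cost_so_far.get((nr, nc), float("inf")):
                    cost_so_far[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    priority = new_cost + heuristic((nr, nc))
                    heapq.heappush(frontier, (priority, new_cost, (nr, nc)))
    return None

# A wall across the middle row forces a detour through the right-hand column.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
route = plan_path(grid, (0, 0), (2, 0))
```

In a real vehicle the grid would be rebuilt many times per second from sensor data, and the planner would be rerun as obstacles move.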

Furthermore, geofencing technology is often integrated into the programming. Geofencing uses GPS to establish virtual boundaries, keeping the vehicle within the geographic limits of its predefined operational design domain (ODD), the set of conditions under which the system is designed to operate. This is particularly useful for managing autonomous fleets or ensuring vehicles adhere to specific geographical restrictions.
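
A geofence check can be sketched with the standard ray-casting point-in-polygon test. The example below assumes the boundary is a simple polygon and that positions have already been projected into flat x/y coordinates; raw GPS latitude/longitude would need a map projection first. The function name `inside_geofence` and the sample boundary are hypothetical.

```python
def inside_geofence(point, polygon):
    """Ray-casting test: is the (x, y) point inside the polygon?

    polygon is a list of (x, y) vertices in order. A horizontal ray is
    cast from the point toward +x; an odd number of edge crossings means
    the point is inside.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y-coordinate can be crossed.
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge meets the horizontal line through the point.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

# Hypothetical service area: a 4 x 4 square in projected coordinates.
service_area = [(0, 0), (4, 0), (4, 4), (0, 4)]
inside_geofence((2, 2), service_area)  # True: within the boundary
inside_geofence((5, 2), service_area)  # False: outside the boundary
```

A vehicle approaching the boundary would typically be routed back inside or brought to a safe stop rather than simply switched off.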

Waymo’s approach exemplifies this sensor fusion and AI-driven programming. Their system combines data from lidar, radar, and cameras to create a holistic understanding of the environment, predicting the movement of objects around the vehicle in real-time. The more miles a self-driving system logs, the more data it accumulates. This continuous learning is crucial; it refines the deep learning algorithms, enabling the car to make increasingly nuanced and sophisticated driving decisions.
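
As a simplified illustration of sensor fusion, the sketch below combines independent distance estimates from two sensors by inverse-variance weighting, so the more precise sensor gets more influence. This is a textbook technique chosen for illustration, not a description of Waymo's actual pipeline; the function name `fuse_ranges` and the sample numbers are assumptions.

```python
def fuse_ranges(measurements):
    """Fuse independent range estimates by inverse-variance weighting.

    measurements is a list of (distance_m, variance_m2) pairs.
    Returns (fused_distance_m, fused_variance_m2). The fused variance is
    never larger than the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, measurements)) / total_weight
    return fused, 1.0 / total_weight

# Hypothetical readings of the same obstacle:
# lidar says 10.0 m (variance 1.0), radar says 14.0 m (variance 3.0).
distance, variance = fuse_ranges([(10.0, 1.0), (14.0, 3.0)])
# distance is about 11.0 m, pulled toward the more precise lidar reading.
```

Real systems usually go further and track objects over time, for example with Kalman filters, but the weighting principle is the same.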

Let’s break down the operational steps of a Waymo vehicle to understand the programming in action:

- Destination Input & Route Calculation: The journey begins when a passenger sets a destination. The car’s software, the core of its programming, calculates the optimal route using mapping data and real-time traffic information.

- 3D Environmental Mapping with Lidar: A roof-mounted, rotating lidar sensor scans a 60-meter radius around the car, generating a dynamic 3D map of the immediate environment. This provides precise spatial awareness.

- Positioning System: A sensor on the rear wheel tracks sideways movement, precisely positioning the car within the 3D map created by the lidar. This ensures accurate localization.

- Obstacle Detection with Radar: Radar systems in the bumpers measure distances to obstacles, complementing the lidar’s 3D mapping with robust object detection, especially in adverse weather.

- AI-Powered Sensor Fusion and Data Processing: The AI software acts as the central hub, integrating data from all sensors along with Google Street View data and internal cameras. This fusion provides a comprehensive and contextual understanding of the driving scene.

- Deep Learning for Decision-Making: The AI utilizes deep learning algorithms to mimic human perception and decision-making. It controls the car’s driving systems – steering, acceleration, and braking – based on learned patterns and real-time sensor data. This is where the core programming translates data into action.

- Navigation Augmentation with Google Maps: The software consults Google Maps for advanced information on landmarks, traffic signs, and traffic lights, enhancing navigation and anticipation of road conditions.

- Human Override Capability: An essential safety feature is the override function, allowing a human to take immediate control of the vehicle in unforeseen circumstances. This ensures a safety net in the current stages of autonomous technology.
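
The last two stages of the list above, decision-making and human override, can be sketched as a single control cycle. The example is deliberately simple and rule-based: it brakes when an obstacle lies within the stopping distance v²/(2a) plus a margin, and lets a human command take priority over the planner. The names `Command`, `plan_longitudinal`, and `arbitrate`, as well as the deceleration and margin values, are illustrative assumptions; a real system would use learned models and far richer logic.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Command:
    steering: float  # steering angle in radians, positive = left
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0
    source: str      # "planner" or "human"

def plan_longitudinal(speed_mps: float, obstacle_distance_m: float,
                      max_decel_mps2: float = 6.0, margin_m: float = 5.0) -> Command:
    """Brake fully if the obstacle is within stopping distance plus a margin."""
    stopping_distance = speed_mps ** 2 / (2 * max_decel_mps2)
    if obstacle_distance_m <= stopping_distance + margin_m:
        return Command(steering=0.0, throttle=0.0, brake=1.0, source="planner")
    # Otherwise keep a gentle, fixed throttle (a placeholder for real speed control).
    return Command(steering=0.0, throttle=0.3, brake=0.0, source="planner")

def arbitrate(planner_cmd: Command, human_cmd: Optional[Command] = None) -> Command:
    """A human command, when present, always wins over the planner."""
    return human_cmd if human_cmd is not None else planner_cmd

# At 20 m/s the stopping distance is about 33 m, so an obstacle 30 m ahead triggers braking.
cmd = arbitrate(plan_longitudinal(speed_mps=20.0, obstacle_distance_m=30.0))
```

Keeping the override in a separate, very small function reflects a common design choice: the safety-critical arbitration logic stays easy to review and test independently of the planner.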

Programming for Varying Levels of Autonomy

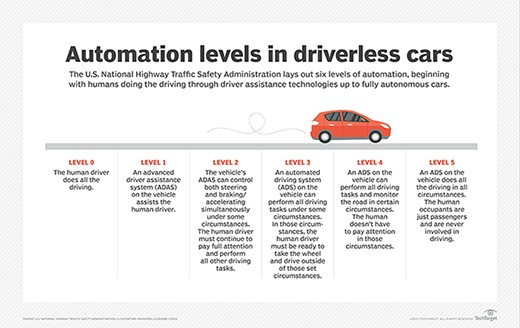

While Waymo represents a high degree of autonomy, many vehicles on the road today incorporate self-driving features at varying levels. The Society of Automotive Engineers (SAE) defines six levels of driving automation, each requiring different degrees of programming complexity:

- Level 0: No Automation: The programming in the car is limited to basic functions; the human driver controls everything.

- Level 1: Driver Assistance: The vehicle can assist with steering OR acceleration/braking (e.g., adaptive cruise control). Programming focuses on specific assistance features while the driver remains fully engaged.

- Level 2: Partial Automation: The vehicle can control steering AND acceleration/braking simultaneously (e.g., lane centering and adaptive cruise control). Programming becomes more complex, coordinating multiple automated functions, but driver vigilance is still critical.

- Level 3: Conditional Automation: The vehicle can perform all driving tasks in specific scenarios (e.g., highway driving). Programming must handle conditional autonomy, managing driving tasks in defined ODDs and safely transitioning control back to the driver when necessary.

- Level 4: High Automation: The vehicle can self-drive in certain scenarios without driver intervention. Programming is significantly more advanced, enabling autonomous operation within specific environments, but the system is still limited to the environments and conditions it was designed for.

- Level 5: Full Automation: The vehicle can self-drive under all conditions without any human input. This represents the pinnacle of autonomous programming, requiring systems that can handle any driving scenario a human driver could encounter.

The six levels of automation for driverless cars.

So far, the most advanced systems have reached Level 4 autonomy, and only in controlled or geofenced environments. Programming for each level demands increasing sophistication in sensor integration, AI algorithms, decision-making logic, and safety protocols. The jump from Level 4 to Level 5, full autonomy, is particularly challenging, requiring programming that can handle the vast complexity and unpredictability of real-world driving scenarios with human-level competence or better.
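
The six levels described above can be encoded in software so that other components know what to expect from the human. The sketch below is one possible encoding in Python; the enum member names and the `human_role` helper are assumptions that summarize the level descriptions in this article, not an official SAE data model.

```python
from enum import IntEnum

class SAELevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5

def human_role(level: SAELevel) -> str:
    """Summarize what the human must do at a given automation level."""
    if level <= SAELevel.PARTIAL_AUTOMATION:
        # Levels 0-2: the driver stays engaged and supervises at all times.
        return "drives or continuously supervises"
    if level == SAELevel.CONDITIONAL_AUTOMATION:
        # Level 3: the system drives in defined scenarios but may hand control back.
        return "must be ready to take over when requested"
    if level == SAELevel.HIGH_AUTOMATION:
        # Level 4: no driver intervention needed, but only in certain scenarios.
        return "not needed within the supported scenarios"
    # Level 5: no human input under any conditions.
    return "not needed"

human_role(SAELevel.PARTIAL_AUTOMATION)  # "drives or continuously supervises"
```

Using an `IntEnum` keeps the levels ordered, so comparisons such as `level <= SAELevel.PARTIAL_AUTOMATION` read naturally.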

Programming Challenges and the Future of Driverless Car Code

Despite the remarkable progress, programming driverless cars still faces significant hurdles. These include:

- Edge Cases and Unpredictability: Programming needs to account for countless unexpected situations – unusual weather, road debris, erratic pedestrian behavior, and novel scenarios. Creating algorithms that can generalize and react safely to unforeseen events is a major challenge.

- Sensor Limitations: Sensors can be affected by weather conditions (heavy rain, snow, fog) or obstruction, impacting data accuracy. Programming must incorporate redundancy and fallback mechanisms to maintain safety when sensor data is compromised.

- Ethical Dilemmas in Programming: Autonomous vehicles may face situations requiring ethical decisions, such as the classic trolley problem. Programming these ethical frameworks into AI decision-making is a complex and debated area.

- Cybersecurity: Ensuring the security of the vast software and communication systems in driverless cars is paramount. Programming must be robust against hacking and malicious attacks to prevent catastrophic failures.

- Validation and Testing: Thoroughly testing and validating the millions of lines of code in autonomous driving systems is incredibly complex. Developing robust testing methodologies to ensure safety and reliability across all possible scenarios is crucial.
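
The redundancy and fallback idea from the Sensor Limitations item above can be illustrated with a small mode selector. The sketch assumes each sensor reports a confidence score between 0 and 1, and that the vehicle has three operating modes; the function name `select_mode`, the mode names, and the thresholds are all hypothetical.

```python
def select_mode(sensor_confidence, min_confidence=0.6, min_healthy=2):
    """Pick an operating mode from per-sensor confidence scores.

    sensor_confidence maps a sensor name to a score in [0, 1].
    Returns "NORMAL" when every sensor is healthy, "DEGRADED" when enough
    sensors remain healthy to continue cautiously (for example at reduced
    speed), and "MINIMAL_RISK_STOP" otherwise.
    """
    if not sensor_confidence:
        return "MINIMAL_RISK_STOP"  # no sensor data at all
    healthy = [name for name, score in sensor_confidence.items()
               if score >= min_confidence]
    if len(healthy) == len(sensor_confidence):
        return "NORMAL"
    if len(healthy) >= min_healthy:
        return "DEGRADED"
    return "MINIMAL_RISK_STOP"

# Heavy fog: the camera's confidence drops, but lidar and radar still agree.
select_mode({"lidar": 0.8, "radar": 0.9, "camera": 0.2})  # "DEGRADED"
```

Checks like this are also a natural target for automated tests, since each combination of sensor failures maps to a single expected mode.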

The future of driverless car programming lies in advancing AI algorithms, improving sensor technology, creating more robust and adaptable software architectures, and establishing comprehensive safety and ethical guidelines. As developers continue to refine the code that powers these vehicles, the dream of safe, efficient, and universally accessible autonomous transportation moves closer to reality. The journey to fully driverless cars is fundamentally a journey of advancing the art and science of programming, pushing the boundaries of what AI can achieve in the real world.