For anyone involved in medical education, especially within internal medicine, the question of how we measure physician competency is paramount. Residency programs use various evaluation methods, most notably milestone ratings and the American Board of Internal Medicine (ABIM) certification examination. But do these metrics truly reflect a physician’s ability to care for patients? More specifically, do internal medicine programs care about the scores from these evaluations, and should they?

A recent study delved into this critical question, examining the relationship between internal medicine residents’ performance on milestone ratings and certification exams, and the outcomes of their hospitalized patients. This article will unpack the findings of this research, explore the implications for internal medicine programs, and shed light on whether internal medicine programs should care about scores and which scores truly matter.

Milestone Ratings vs. Certification Exams: Two Sides of the Competency Coin

Internal medicine residency programs in the US employ two primary methods to assess the competence of their trainees. The first, milestone ratings, were introduced in 2013 by the Accreditation Council for Graduate Medical Education (ACGME) and the American Board of Medical Specialties (ABMS). These milestones were designed to create a standardized, systematic approach to evaluating a resident’s progress towards competency throughout their training.

The impetus behind milestones was to address perceived weaknesses in previous assessment methods. These earlier methods often lacked clear performance expectations, relied heavily on subjective program director evaluations, lacked standardized criteria, and didn’t consistently promote competence improvement during residency. The milestone framework centers around six core competencies: patient care, medical knowledge, practice-based learning and improvement, systems-based practice, interpersonal and communication skills, and professionalism. Each competency is further broken down into sub-competencies, providing a detailed framework for feedback and evaluation throughout residency.

The second major assessment tool is the ABIM internal medicine certification examination. This exam, typically taken soon after residency completion, is designed to evaluate clinical judgment based on medical knowledge. Unlike milestone ratings, the certification exam doesn’t involve direct observation of residents in clinical settings. Instead, it utilizes scenario-based questions developed by practicing physicians to simulate real-world clinical decision-making.

Given the significance of both milestone ratings and board certification for newly trained internists, surprisingly little research exists on how these evaluations correlate with actual patient outcomes. This study aimed to bridge this gap by investigating the association of both milestone ratings and ABIM certification exam scores with hospital outcomes for patients treated by newly trained hospitalists.

Study Design: Linking Physician Evaluations to Hospital Outcomes

This research employed a retrospective cohort analysis of 6,898 hospitalists who completed their internal medicine training between 2016 and 2018. The study focused on Medicare fee-for-service beneficiaries hospitalized between 2017 and 2019, who were cared for by these newly trained hospitalists.

To ensure a robust analysis, several measures were taken to refine the study population. Elective hospitalizations and patients in hospice care were excluded. A hospitalist was attributed to a hospitalization if they provided the majority of inpatient evaluation and management services during the stay. The study further focused on hospitalists in their first few years post-residency, practicing in acute short-stay hospitals with at least 100 beds, and caring for patients within the first three days of admission – the period where physician influence on outcomes is most pronounced.
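The attribution rule described above can be sketched in a few lines. This is only an illustration, not the study's code: the function name `attribute_stays`, the `(stay_id, physician_id)` claim layout, and the reading of "majority" as strictly more than half of the billed evaluation and management services are all assumptions made here.

```python
from collections import Counter, defaultdict


def attribute_stays(em_claims):
    """Map each stay to the physician who billed a strict majority of its E&M services.

    em_claims: iterable of (stay_id, physician_id) pairs, one per billed service.
    Stays where no physician exceeds 50% of services are left unattributed.
    (Hypothetical data layout; "majority" is assumed to mean > 50%.)
    """
    counts = defaultdict(Counter)
    for stay_id, physician_id in em_claims:
        counts[stay_id][physician_id] += 1

    attributed = {}
    for stay_id, by_physician in counts.items():
        physician_id, n = by_physician.most_common(1)[0]
        if n * 2 > sum(by_physician.values()):  # strict majority
            attributed[stay_id] = physician_id
    return attributed


# Made-up claims: stay1 is mostly drA; stay2 is split evenly, so it is dropped.
claims = [
    ("stay1", "drA"), ("stay1", "drA"), ("stay1", "drB"),
    ("stay2", "drA"), ("stay2", "drB"),
]
result = attribute_stays(claims)
print(result)
```

In this toy input only `stay1` is attributed, because no physician provided more than half of the services for `stay2`.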

The primary outcomes measured were 7-day post-admission mortality and 7-day post-discharge readmission rates. These short-term outcomes were chosen to reflect the quality of care delivered during hospitalization, minimizing the influence of pre-existing conditions or social factors. Secondary outcomes included 30-day mortality and readmission rates, length of hospital stay, and frequency of subspecialist consultations.

Physician competency was assessed using two primary metrics: milestone ratings and certification exam scores. Milestone ratings included an overall core competency rating (average of six core competency ratings) and a medical knowledge core competency rating. These were categorized as low, medium, or high based on the mean of end-of-residency milestone sub-competency ratings. Certification exam scores were represented as yearly quartiles based on the scores of all physicians taking the exam for the first time in a given year.
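To make the idea of yearly quartiles concrete, here is a minimal sketch that ranks each first-time test taker against others from the same exam year. The function name, the tuple layout, and the scores are invented for illustration and are not on the real ABIM score scale; the exact cut-point method the study used is not stated, so the "inclusive" method of `statistics.quantiles` is an assumption.

```python
from bisect import bisect_left
from collections import defaultdict
from statistics import quantiles


def yearly_quartiles(first_attempts):
    """Return {physician_id: quartile}, where 1 is the bottom and 4 the top quartile.

    first_attempts: iterable of (physician_id, exam_year, score) for first-time takers.
    Cut points are computed separately for each exam year.
    """
    by_year = defaultdict(list)
    for physician_id, year, score in first_attempts:
        by_year[year].append((physician_id, score))

    assigned = {}
    for rows in by_year.values():
        # Three cut points that split this year's scores into four groups.
        cuts = quantiles([score for _, score in rows], n=4, method="inclusive")
        for physician_id, score in rows:
            assigned[physician_id] = bisect_left(cuts, score) + 1
    return assigned


# Made-up scores: the 2017 cohort scores higher overall than the 2016 cohort.
first_attempts = (
    [(f"a{i}", 2016, s) for i, s in enumerate([300, 350, 400, 450, 500, 550, 600, 650])]
    + [(f"b{i}", 2017, s) for i, s in enumerate([500, 600, 700, 800])]
)
quartile = yearly_quartiles(first_attempts)
print(quartile["a4"], quartile["b0"])  # both scored 500, in different years
```

In this toy data the same raw score of 500 lands in the third quartile for 2016 and the bottom quartile for 2017, which is the point of ranking within year: a physician is compared only with peers who sat the same year's exam.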

The statistical analysis used hospital fixed effects to account for hospital-level variation in quality and adjusted for patient characteristics, physician experience, and year of hospitalization. In effect, physicians were compared with colleagues practicing in the same hospital, which reduces confounding from differences between hospitals.
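The intuition behind hospital fixed effects can be shown with a deliberately simplified sketch: subtract each hospital's own average from both the exposure and the outcome, then estimate the slope from what is left. The study's actual models also adjusted for patient and physician covariates, which this sketch omits. The function name, the single binary exposure (1 for a top-quartile hospitalist, 0 otherwise), and all numbers below are made up for illustration.

```python
from collections import defaultdict


def within_hospital_slope(rows):
    """Slope of outcome on exposure after removing each hospital's own mean.

    rows: iterable of (hospital_id, exposure, outcome).
    This is the single-regressor "within" (fixed effects) estimator.
    """
    groups = defaultdict(list)
    for hospital, exposure, outcome in rows:
        groups[hospital].append((exposure, outcome))

    num = den = 0.0
    for obs in groups.values():
        mean_x = sum(x for x, _ in obs) / len(obs)
        mean_y = sum(y for _, y in obs) / len(obs)
        for x, y in obs:
            num += (x - mean_x) * (y - mean_y)
            den += (x - mean_x) ** 2
    return num / den


# Made-up data: hospital A has a higher baseline outcome rate than hospital B,
# and B employs more high-scoring physicians. Within each hospital, exposure
# lowers the outcome by exactly 0.5.
rows = [
    ("A", 0, 5.0), ("A", 1, 4.5),
    ("B", 0, 2.0), ("B", 1, 1.5), ("B", 1, 1.5),
]
within = within_hospital_slope(rows)
# Treating all rows as one hospital gives the naive pooled slope for contrast.
pooled = within_hospital_slope([("all", x, y) for _, x, y in rows])
print(round(within, 3), round(pooled, 3))
```

Here the pooled comparison overstates the difference (a slope close to -1.0) because high-scoring physicians are concentrated in the hospital with the lower baseline rate, while the within-hospital estimate recovers the built-in value of about -0.5.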

Key Findings: Certification Scores Matter, Milestone Ratings Less So

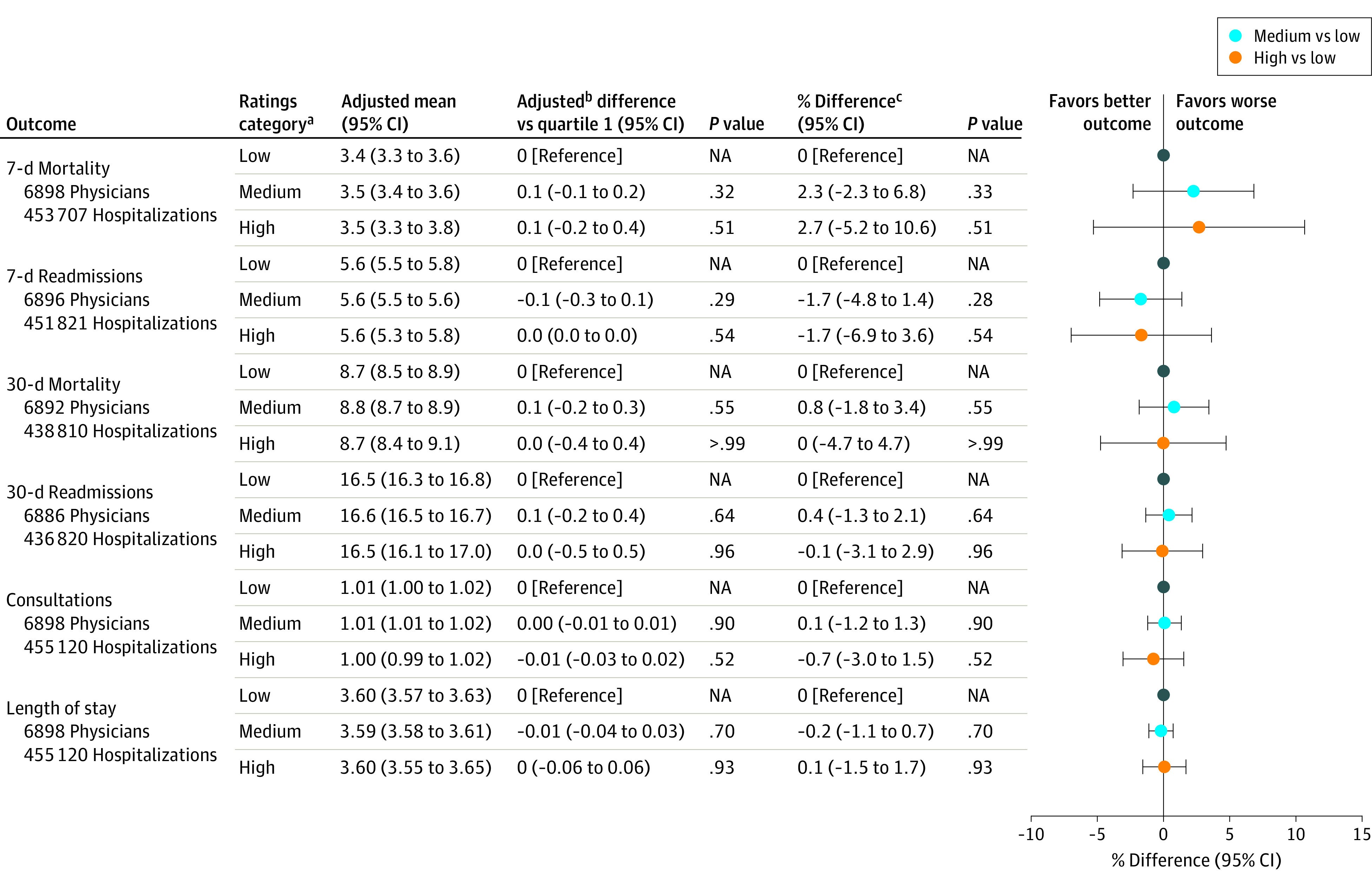

The study’s findings revealed a striking difference between the two evaluation methods. Milestone ratings, whether overall or specifically for medical knowledge, showed no significant association with any of the measured hospital outcomes. For instance, patients treated by hospitalists with high overall core competency ratings did not experience significantly different 7-day mortality or readmission rates compared to those treated by hospitalists with low ratings. In fact, the study noted a non-significant increase in 7-day mortality associated with high overall competency ratings, which is consistent with the absence of any protective association.

Figure 2. Bar chart displaying the adjusted percentage difference in patient outcomes for hospitalists categorized by overall core competency ratings (low, medium, high). Outcomes include 7-day mortality, 7-day readmission, 30-day mortality, 30-day readmission, length of stay, and consultation frequency. No statistically significant association was observed between overall core competency ratings and patient outcomes.

In stark contrast, certification examination scores demonstrated a significant association with patient outcomes. Patients cared for by hospitalists in the top quartile of exam scores had an 8.0% reduction in 7-day mortality rates and a 9.3% reduction in 7-day readmission rates compared to patients of hospitalists in the bottom quartile. The benefit extended to 30-day mortality as well, with a significant 3.5% reduction observed. Furthermore, higher exam scores were associated with a slight increase in subspecialist consultations, suggesting potentially better utilization of specialist expertise.

Figure 4. Bar chart illustrating the adjusted percentage difference in patient outcomes for hospitalists categorized by certification examination score quartiles (bottom, second, third, top). Outcomes include 7-day mortality, 7-day readmission, 30-day mortality, 30-day readmission, length of stay, and consultation frequency. Top quartile certification exam scores were associated with significantly reduced 7-day mortality and 7-day readmission rates.

These findings remained consistent across various sensitivity analyses, including adjustments for different physician attribution methods, control variables, and using continuous measures of ratings and exam scores. The robustness of these results strengthens the conclusion that certification exam scores, but not milestone ratings, are associated with tangible differences in patient outcomes.

Why the Discrepancy? Implications for Internal Medicine Programs

The study’s findings raise a crucial question: why do certification exam scores correlate with patient outcomes while milestone ratings do not? Several potential explanations emerge.

Milestone ratings, while intended to provide comprehensive feedback, may suffer from subjectivity and a lack of standardization across programs. Evaluations might be influenced by factors beyond pure competency, such as personal relationships or a reluctance to give lower ratings, especially as residents approach graduation. The study itself noted a mismatch, with a substantial proportion of physicians rated as “low” in overall competency still achieving high certification exam scores, and vice versa. This suggests that milestone ratings may not be consistently capturing the same aspects of physician competency that are reflected in exam performance.

Furthermore, milestone ratings, by their nature, assess a broad range of competencies, some of which may be less directly linked to immediate patient outcomes like mortality and readmission. While professionalism and communication skills are undoubtedly important, medical knowledge and clinical judgment, heavily tested in certification exams, might be more directly impactful in acute hospital settings.

Certification exams, on the other hand, are standardized, objective measures of medical knowledge and clinical reasoning. They are designed by experts to reflect real-world clinical scenarios and assess a physician’s ability to apply medical knowledge to patient care. The study’s findings suggest that this knowledge base, as measured by the certification exam, does translate into improved patient outcomes, particularly in the short-term.

So, should internal medicine programs care about scores? The answer, based on this research, is a resounding yes, particularly when it comes to certification exam scores. While milestone ratings serve a valuable purpose in resident development and feedback, their lack of association with patient outcomes suggests they may not be the most reliable indicator of future physician performance in terms of patient-centered metrics.

For internal medicine programs, these findings have several important implications:

- Re-evaluate the role of milestone ratings: Programs should critically assess how milestone ratings are used and interpreted. While they remain valuable for formative feedback and resident development, their limitations as predictors of patient outcomes should be acknowledged. Efforts to improve the objectivity and standardization of milestone assessments may be warranted.

- Emphasize preparation for certification exams: Given the clear link between certification exam scores and patient outcomes, programs should ensure residents are adequately prepared for these exams. This includes robust medical knowledge training, clinical reasoning skill development, and potentially incorporating standardized exam performance data into resident evaluations and feedback.

- Consider incorporating standardized exam metrics into program evaluation: Program directors might consider using aggregate certification exam performance as one metric for evaluating program effectiveness. While not the sole indicator, it can provide valuable data on how well a program prepares residents for independent practice and, ultimately, for delivering high-quality patient care.

- Further research needed: This study provides valuable insights, but further research is needed to explore the nuances of physician competency assessment. Future studies could investigate the reasons behind the discrepancy between milestone ratings and exam scores, explore the impact of the revised milestone system implemented in 2021, and examine the long-term implications of exam performance on patient outcomes and career trajectories.

Conclusion: Scores as Signals of Quality

This study provides compelling evidence that, among newly trained hospitalists, certification examination scores, but not residency milestone ratings, are associated with improved outcomes for hospitalized Medicare beneficiaries. This suggests that while internal medicine programs should continue to use milestone ratings for resident development, they should also care deeply about scores on standardized certification exams as a more reliable signal of physician competency related to patient outcomes. Incorporating standardized exam performance more formally into residency program evaluations and resident feedback may be a crucial step towards enhancing the quality of internal medicine training and, ultimately, improving patient care.

Educational Objective Insight: Although both milestone ratings and certification exams are used to evaluate internal medicine residents, certification exam scores appear to be the more reliable predictor of patient outcomes. Internal medicine programs should therefore pay close attention to standardized exam performance as a meaningful measure of physician competency and its effect on patient care.